Unfortunately, its development has fallen far behind that of the conventional deep neural network (DNN), mainly because of difficult training and the lack of widely accepted hardware experiment platforms. Training becomes similar to that of a DNN thanks to the closed-form solution to the spiking waveform dynamics. In addition, we develop a phase-domain signal processing circuit schema… The error function is calculated as the difference between the output vector produced by the neural network with a given set of weights and the target output vector for the given training inputs. Many methods are used to train neural networks, and gradient descent is one of the most important.

Gradient descent consists of finding the gradient of the function and choosing the next solution point in the direction of steepest descent along its surface, that is, along the negative gradient. Applied iteratively, gradient descent finds the values of the neural network's parameters that solve a specific problem. In this project, I implemented a proof of concept of my theoretical knowledge of neural networks by coding a simple neural network from scratch in Python, without using any machine learning library. The resulting values are then passed through the sigmoid activation function to produce the output of the hidden layer, a1.

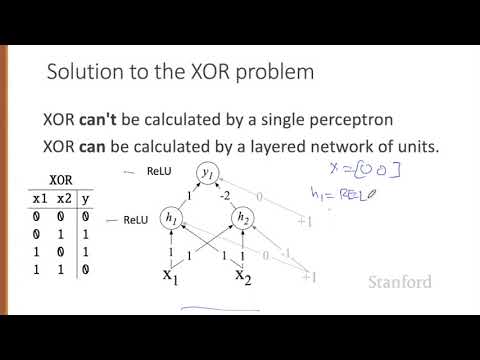

The Multi-layered Perceptron

Matlab, together with Simulink, can be used to model the neural network manually, without writing code or knowing any programming language. Since Simulink is integrated with Matlab, we can also code the neural network in Matlab and obtain its mathematically equivalent model in Simulink. Matlab also has a dedicated tool in its library for implementing neural networks, called NN tool. Using this tool, we can directly add the data for the input and the desired output, or target. The values of the weights and biases determine how the neural network processes the inputs and makes predictions. In this case, the neural network has learned to perform the XOR operation accurately, as demonstrated by the prediction on the test input [1, 0].

A good resource is the TensorFlow Neural Net playground, where you can try out different network architectures and view the results. So if you want to find out more, have a look at this excellent article by Simeon Kostadinov. Finally, we need an AND gate, which we’ll train just as we have been. The ⊕ (“o-plus”) symbol you see in the legend is conventionally used to represent the XOR boolean operator.
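To make the role of the final AND gate concrete, here is a minimal sketch of XOR assembled from three step-activation perceptrons. It assumes the other two gates are OR and NAND, and the weights below are hand-picked for illustration rather than trained; the function names are hypothetical.

```python
import numpy as np

def perceptron(x, w, b):
    """Step-activation perceptron: outputs 1 when w.x + b > 0, else 0."""
    return int(np.dot(w, x) + b > 0)

# Hand-picked (not learned) weights and biases, assumed for illustration.
def OR(x):
    return perceptron(x, np.array([1.0, 1.0]), -0.5)

def NAND(x):
    return perceptron(x, np.array([-1.0, -1.0]), 1.5)

def AND(x):
    return perceptron(x, np.array([1.0, 1.0]), -1.5)

def XOR(x):
    # XOR(a, b) = AND(OR(a, b), NAND(a, b))
    return AND(np.array([OR(x), NAND(x)]))

xor_table = {(a, b): XOR(np.array([a, b])) for a in (0, 1) for b in (0, 1)}
print(xor_table)
```

The OR and NAND perceptrons act as a hidden layer, and the AND perceptron combines their outputs, which is the same two-layer structure a trained network ends up approximating.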

1.1. Generate Fake Data

Hopefully, this post gave you some idea of how to build and train perceptrons and vanilla networks. The algorithm only terminates when correct_counter hits 4, which is the size of the training set, so on the XOR data this will go on indefinitely. Here, we cycle through the data indefinitely, keeping track of how many consecutive datapoints we correctly classified. If we manage to classify everything in one stretch, we terminate our algorithm. We know that a datapoint’s evaluation is expressed by the relation wX + b. This is often simplified and written as a dot product of the weight and input vectors plus the bias.
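The loop described above can be sketched roughly as follows. This is not the original code: only `correct_counter` comes from the text, while `train_perceptron`, `lr`, and the `max_steps` safety cap (added so the example halts on XOR) are assumptions.

```python
import numpy as np

def train_perceptron(X, y, lr=1.0, max_steps=10_000):
    """Cycle through the data until every point is classified in one stretch."""
    w = np.zeros(X.shape[1])
    b = 0.0
    correct_counter = 0  # consecutive correct classifications
    i = 0
    steps = 0
    while correct_counter < len(X) and steps < max_steps:
        x_i, target = X[i], y[i]
        pred = int(np.dot(w, x_i) + b > 0)  # evaluate w.x + b with a step activation
        if pred == target:
            correct_counter += 1
        else:
            # Perceptron update rule; a mistake resets the streak.
            w = w + lr * (target - pred) * x_i
            b = b + lr * (target - pred)
            correct_counter = 0
        i = (i + 1) % len(X)
        steps += 1
    converged = correct_counter == len(X)
    return w, b, converged

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
w_and, b_and, and_converged = train_perceptron(X, np.array([0, 0, 0, 1]))
w_xor, b_xor, xor_converged = train_perceptron(X, np.array([0, 1, 1, 0]))
print("AND converged:", and_converged, "| XOR converged:", xor_converged)
```

On the AND data the streak reaches 4 and the loop stops. On the XOR data no single line classifies all four points, so only the step cap ends the loop.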

Finally, the function returns the learned weights and biases, as well as an array of the cost values over the training epochs. The cost of the neural network’s predictions is then calculated using the binary cross-entropy loss function. Going back to the original parameters of 0.01 learning rate and 10,000 iterations, the classification decision boundary plot was also created (the full code for this graph is included in the appendix). 13 is quite close to the final goal that the ANN was created to do.
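For reference, the binary cross-entropy cost mentioned above can be written as a short NumPy function. This is a sketch, not the original code; the name `binary_cross_entropy` and the `eps` clipping (added to avoid taking the log of zero) are assumptions.

```python
import numpy as np

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy between targets and predicted probabilities."""
    y_true = np.asarray(y_true, dtype=float)
    # Clip predictions away from 0 and 1 so the logarithms stay finite.
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(y_true * np.log(y_pred)
                          + (1.0 - y_true) * np.log(1.0 - y_pred)))

confident_right = binary_cross_entropy([0, 1, 1, 0], [0.1, 0.9, 0.9, 0.1])
undecided = binary_cross_entropy([0, 1, 1, 0], [0.5, 0.5, 0.5, 0.5])
print(confident_right, undecided)
```

A network that outputs 0.5 for every input scores ln 2 (about 0.693), so a cost curve that falls well below that value indicates the network is learning something beyond a constant guess.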

1.4. Update the Weights using Gradient Descent

Remember the linear activation function we used on the output node of our perceptron model? You may have heard of the sigmoid and the tanh functions, which are some of the most popular non-linear activation functions. This paper presents a case study of the analysis of local minima in feedforward neural networks. Firstly, a new methodology for analysis is presented, based upon consideration of trajectories through weight space by which a training algorithm might escape a hypothesized local minimum. This analysis method is then applied to the well known XOR (exclusive-or) problem, which has previously been considered to exhibit local minima. The analysis proves the absence of local minima, eliciting significant aspects of the structure of the error surface.

Additionally, one can try using different activation functions, different architectures, or even building a deep neural network to improve the performance of the model. Finally, one can explore more advanced techniques like convolutional neural networks, recurrent neural networks, or generative adversarial networks for more complex problems. The output of the hidden layer is then multiplied by the weight matrix w2, and the bias vector b2 is added. The resulting values are passed through the sigmoid activation function to produce the final output of the neural network, y_pred. The code uses the Python programming language and the NumPy library for numerical computations. The neural network is implemented using a feedforward architecture, with one hidden layer and one output layer.
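The forward pass described above might look like the following sketch. The names `w2`, `b2`, `a1`, and `y_pred` come from the text; `w1`, `b1`, the array shapes, and the two-unit hidden layer are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, w1, b1, w2, b2):
    """Feedforward pass: one hidden layer and one output layer, both sigmoid."""
    a1 = sigmoid(X @ w1 + b1)       # hidden-layer output, shape (n_samples, n_hidden)
    y_pred = sigmoid(a1 @ w2 + b2)  # network output, shape (n_samples, 1)
    return a1, y_pred

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
rng = np.random.default_rng(0)  # arbitrary seed, for reproducibility only
w1, b1 = rng.normal(size=(2, 2)), np.zeros((1, 2))
w2, b2 = rng.normal(size=(2, 1)), np.zeros((1, 1))
a1, y_pred = forward(X, w1, b1, w2, b2)
print(a1.shape, y_pred.shape)
```

With untrained random weights the outputs are not meaningful; the forward pass only becomes useful once the weights have been fitted.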

What is a neural network?

For the backward pass, the new weights are calculated based on the error that is found. In the ANN, the forward pass of the network refers to the calculation of the output by considering all the inputs, weights, biases, and activation functions in the various layers. Backpropagation is an algorithm for updating the weights and biases of a model based on their gradients with respect to the error function, starting from the output layer and working back to the first layer.
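As a rough sketch of how the forward and backward passes fit together, the code below trains a small network on XOR with full-batch gradient descent. It assumes sigmoid activations and a binary cross-entropy cost; the function names, the two-unit hidden layer, the learning rate, and the seed are illustrative choices, not the original implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost_and_grads(X, y, w1, b1, w2, b2):
    m = X.shape[0]
    # Forward pass.
    a1 = sigmoid(X @ w1 + b1)
    y_pred = sigmoid(a1 @ w2 + b2)
    p = np.clip(y_pred, 1e-12, 1 - 1e-12)  # keep the logs finite
    cost = float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))
    # Backward pass: from the output layer back to the first layer.
    dz2 = (y_pred - y) / m                   # sigmoid + cross-entropy gradient
    dw2 = a1.T @ dz2
    db2 = dz2.sum(axis=0, keepdims=True)
    dz1 = (dz2 @ w2.T) * a1 * (1 - a1)       # chain rule through the hidden sigmoid
    dw1 = X.T @ dz1
    db1 = dz1.sum(axis=0, keepdims=True)
    return cost, (dw1, db1, dw2, db2)

def train(X, y, hidden=2, lr=0.1, epochs=10_000, seed=0):
    rng = np.random.default_rng(seed)
    w1, b1 = rng.normal(size=(X.shape[1], hidden)), np.zeros((1, hidden))
    w2, b2 = rng.normal(size=(hidden, 1)), np.zeros((1, 1))
    costs = []
    for _ in range(epochs):
        cost, (dw1, db1, dw2, db2) = cost_and_grads(X, y, w1, b1, w2, b2)
        costs.append(cost)
        # Gradient descent update.
        w1 -= lr * dw1; b1 -= lr * db1
        w2 -= lr * dw2; b2 -= lr * db2
    return (w1, b1, w2, b2), np.array(costs)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)
params, costs = train(X, y)
print("first cost:", costs[0], "last cost:", costs[-1])
```

Whether a network this small reaches a clean XOR solution depends on the initialization and the learning rate, so it is worth inspecting the cost curve rather than assuming convergence.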

- By defining a weight, activation function, and threshold for each neuron, neurons in the network act independently and output data when activated, sending the signal over to the next layer of the ANN [2].

- This allows for the plotting of the errors over the training process.

- In this article, we have explored the implementation of a neural network in Python to perform the XOR operation using backpropagation.

- I hope that the mathematical explanation of a neural network, along with its implementation in Python, will help other readers understand how a neural network works.

To find the minimum of a function using gradient descent, we take steps proportional to the negative of the gradient of the function at the current point. Created by the Google Brain team, TensorFlow presents calculations in the form of stateful dataflow graphs. The library allows you to implement calculations on a wide range of hardware, from consumer devices running Android to large heterogeneous systems with multiple GPUs. This project is an artificial intelligence (neural network) proof of concept that solves the classic XOR problem. It uses well-known neural network concepts such as gradient descent, feedforward, and backpropagation.
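The update rule is easiest to see on a one-dimensional function. The sketch below minimizes f(x) = (x - 3)^2, whose gradient is 2(x - 3); the function, learning rate, and step count are chosen purely for illustration.

```python
def gradient_descent(grad, x0, lr=0.1, steps=100):
    """Repeatedly step against the gradient, scaled by the learning rate."""
    x = x0
    for _ in range(steps):
        x = x - lr * grad(x)
    return x

# f(x) = (x - 3)^2 has gradient 2 * (x - 3) and its minimum at x = 3.
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
print(x_min)
```

Each step here shrinks the distance to the minimum by a factor of 0.8, so the iterate approaches 3 geometrically. A learning rate that is too large would instead overshoot and diverge.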

However, it is limited in its ability to represent complex functions and to generalize to new data. Despite these limitations, a two-layer neural network is a useful tool for representing the XOR function. One neuron with two inputs can form a decision surface in the form of an arbitrary straight line. For the network to implement the XOR function specified in the table above, you need to position the line so that the four points are divided into two sets, one for each output value. Trying to draw such a straight line, we are convinced that this is impossible.
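The impossibility can be seen algebraically. A single neuron with output 1 when w1·x1 + w2·x2 + b > 0 would need b ≤ 0, w1 + b > 0, w2 + b > 0, and w1 + w2 + b ≤ 0. Adding the two middle inequalities gives w1 + w2 + 2b > 0, so w1 + w2 + b > -b ≥ 0, which contradicts the last condition. The sketch below is only a numerical sanity check of this argument, not a proof: it searches a coarse grid of candidate lines (the grid range and the helper name are assumptions).

```python
import itertools
import numpy as np

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])

def separable_on_grid(y, grid=np.linspace(-2, 2, 21)):
    """Return True if some (w1, w2, b) on the grid classifies all four points."""
    for w1, w2, b in itertools.product(grid, repeat=3):
        pred = (X @ np.array([w1, w2]) + b > 0).astype(int)
        if np.array_equal(pred, y):
            return True
    return False

and_separable = separable_on_grid(np.array([0, 0, 0, 1]))
xor_separable = separable_on_grid(np.array([0, 1, 1, 0]))
print("AND:", and_separable, "| XOR:", xor_separable)
```

The search finds a separating line for AND but none for XOR, consistent with the inequality argument.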

With a structure inspired by the biological neural network, the ANN is composed of multiple layers of nodes (the input layer, hidden layer(s), and output layer) that send signals to each other. To solve the XOR problem, we consider two widely used software tools that many developers rely on in their work. The first is Google Colab, which can be used to implement ANN programs by coding the neural network in Python. One reason to choose it is that it supports the Python language. The other major reason is that we can use GPU and TPU processors for the neural network's computations. The second tool that can be used for implementing an ANN is Matlab Simulink.